In this scenario we will look at how to implement HA (High Availability) for OVM 3.1 between a primary site (production) and a secondary site (DR) located 800 km away. This post only covers the storage replication part and how to make it work when a failover is required; I will not cover the database replication side (using Data Guard).

Please note that Oracle VM Manager should be installed on both sites with the same UUID, using the “./runInstaller.sh --uuid <uuid>” command. The UUID of the Oracle VM Manager can be found in its configuration file.

$ cat /u01/app/oracle/ovm-manager-3/.config | grep -i uuid
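If you want to capture the value in a script rather than read it off the screen, something like the following could work. This is only a sketch: it assumes the configuration file stores the value on a line of the form UUID=<value>, and the function name ovm_manager_uuid is made up for this example.

```shell
#!/bin/sh
# Print the UUID stored in an Oracle VM Manager .config file.
# Assumption: the file contains a line of the form UUID=<value>.
# ovm_manager_uuid is a hypothetical helper name, not part of OVM.
ovm_manager_uuid() {
    config_file=${1:-/u01/app/oracle/ovm-manager-3/.config}
    # Match the key case-insensitively and keep everything after the first "=".
    grep -i '^uuid=' "$config_file" | head -n 1 | cut -d= -f2-
}
```

Run on the production manager, ovm_manager_uuid prints the value that the DR installation needs to be given.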

The Preparation

1) Identify the source LUN in the production OVM Manager that we want to replicate to the DR site with HUR (Hitachi Universal Replicator). (360060e8005449c000000449c00004606)

2) Present the LUN to the DR site.

Also specify the HUR source LUN (360060e8005449c000000449c00004606) and the HUR target LUN (you will get the LUN ID from the SAN engineers when they present the LUN to the DR site).

Ask the SAN engineers to activate the HUR process.

The Failover

In the event of a primary site failure, DR procedures will be kicked off as shown below.

1) With the production site down, the storage replication between the production site and the DR site has to be stopped.

2) Since these are block devices, there will be an OCFS2 filesystem on the devices. You will not be able to present the replicated repositories to the OVM servers at the DR site as they are, because the production cluster ID is stamped into the repositories. This causes mounting of the OCFS2 filesystems at the DR site to fail with:

“mount.ocfs2: Cluster name is invalid while trying to join the group”

3) Find the former cluster ID stamped on the replicated repository.

We know the target LUN ID from when the SAN engineers presented the LUN to the DR site, e.g. 360060e8005458e000000458e00004075.

Cluster ID = 16a67562c33aa4fe

4) Find the new OCFS2 cluster ID at DR site.
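One place to look for the DR cluster ID is the o2cb configuration on a DR OVM server. The sketch below assumes the cluster is defined in /etc/ocfs2/cluster.conf and that the name in its "cluster:" stanza is the ID we are after; the function name ocfs2_cluster_name is hypothetical.

```shell
#!/bin/sh
# Print the cluster name from the "cluster:" stanza of an o2cb cluster.conf.
# Assumption: the DR cluster ID is the "name" value in that stanza.
# ocfs2_cluster_name is a hypothetical helper name.
ocfs2_cluster_name() {
    conf=${1:-/etc/ocfs2/cluster.conf}
    awk '
        # Entering the cluster stanza.
        /^cluster:/ { in_cluster = 1; next }
        # Any other stanza header (node:, heartbeat:) ends it.
        /^[a-z]+:/  { in_cluster = 0 }
        # Inside the stanza the line looks like: name = <id>
        in_cluster && $1 == "name" { print $3; exit }
    ' "$conf"
}
```

Comparing this value with the cluster ID found on the replicated repository in step 3 shows whether the repository still needs to be restamped.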

5) Change the cluster ID of the replicated repository (360060e8005458e000000458e00004075, seen here as /dev/sde).

$ tunefs.ocfs2 --update-cluster-stack /dev/sde

Then run fsck.ocfs2 on the same device and answer Y at the prompts to continue.

$ fsck.ocfs2 /dev/sde
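Because both commands write to the device, it may be worth adding a small guard before running them. The sketch below assumes the replicated device should not be mounted anywhere when it is restamped, and it only prints the two commands from this step instead of executing them. The helper names device_is_mounted and print_restamp_commands are made up for this example.

```shell
#!/bin/sh
# Succeed (exit 0) if the device appears as a mount source in the mounts table.
# device_is_mounted and print_restamp_commands are hypothetical helper names.
device_is_mounted() {
    dev=$1
    mounts=${2:-/proc/mounts}
    # The first field of each line in /proc/mounts is the mounted device.
    awk -v dev="$dev" '$1 == dev { found = 1 } END { exit !found }' "$mounts"
}

# Print the restamp commands for a device, refusing if it is mounted.
# Nothing is executed; review the output before running it by hand.
print_restamp_commands() {
    dev=$1
    mounts=${2:-/proc/mounts}
    if device_is_mounted "$dev" "$mounts"; then
        echo "refusing: $dev is mounted" >&2
        return 1
    fi
    echo "tunefs.ocfs2 --update-cluster-stack $dev"
    echo "fsck.ocfs2 $dev"
}
```

For the device used in this post the call would be print_restamp_commands /dev/sde.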

6) Check that the cluster ID has been updated.

7) Repositories before the refresh (service ovmm stop / start)

8) Repositories after the refresh (service ovmm stop / start)

Log on to the DR OVM Manager console.

9) You should now see the VM under Unassigned Virtual Machines.

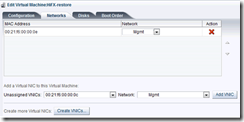

10) Edit the VM and replace the network adapter.

11) Migrate the VM to a host.

12) Start the VM.

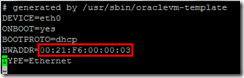

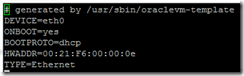

13) Configure the MAC address and IP address in the VM's OS.

Edit /etc/sysconfig/network-scripts/ifcfg-eth0

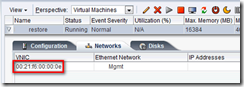

Get the new MAC address from the DR OVM Manager.

Update /etc/sysconfig/network-scripts/ifcfg-eth0 with the new MAC address.

14) Restart the network service (service network restart).
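The MAC address change in step 13 can also be scripted. This is a minimal sketch, assuming the file already contains an HWADDR= line (if it does not, nothing is changed); set_hwaddr is a hypothetical helper name and the MAC address is passed in by you.

```shell
#!/bin/sh
# Rewrite the HWADDR line of an ifcfg file in place, keeping a .bak copy.
# Assumption: the file already has a line starting with HWADDR=.
# set_hwaddr is a hypothetical helper name.
set_hwaddr() {
    ifcfg=$1
    mac=$2
    cp "$ifcfg" "$ifcfg.bak"
    # Replace the whole HWADDR=... line; other settings are left untouched.
    sed "s/^HWADDR=.*/HWADDR=$mac/" "$ifcfg.bak" > "$ifcfg"
}
```

Inside the VM it would be called as set_hwaddr /etc/sysconfig/network-scripts/ifcfg-eth0 followed by the MAC address shown in the DR OVM Manager, and then the network service is restarted as in step 14.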

Regards,

Francisco Munoz Alvarez

Thanks, this is what I needed!

Regards,

Savo

Hi, when I try to execute the fsck.ocfs2 command, it returns the following error:

fsck.ocfs2: I/O error on channel while recovering cluster information

Regards.

Replace “./runInstaller.sh –uuid ” with “./runInstaller.sh --uuid ”.

The double ‘-’ before ‘uuid’ is quite important.